Project/Paint from View

Summary

OK, so this is a revamp of the GoingInBlind project idea I was testing with Godot and Unity way back when. Simple premise: you walk around in a pitch-black environment, using something akin to scanning or echolocation to guide you…

Those projects used an intersection-type shader with the depth pass to achieve a line scanning across the environment, using an instanced mesh spawned at the player’s location.

I wanted to try to re-create the effect using particle systems in Godot. This is kind of possible, but not great, mostly because it relies on Godot’s particle physics, which seem a bit lackluster in comparison to Unity’s. Maybe Godot’s are more performant, but I dunno. Either way, Godot relies on simple geometric shape nodes for collision, a heightfield, or a baked SDF, which works but requires baking and only supports static geometry. This doesn’t please me. I believe Unity can handle complex and dynamic geometry by default, but there’s no such solution yet for Godot’s particles.

That would be a poor method anyway, so I went back to thinking about using shaders, which should be far more performant and, most importantly, should support dynamic meshes since the effect is shader-based rather than particle-collision-based.

https://github.com/ZestfulFibre/MultipleImpactShield

I found this, which is a super simple project in Godot using a script and a simple shader that creates a “multiple-impact shield” effect. In it, you click on a mesh, and the script on the mesh object supplies the collision point data and decay_time to the shader.

The shader then just creates a series of arbitrary shapes (circles) based on that given impact_position from the script.
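To make that a bit more concrete, here is a rough Python sketch of the per-pixel math such a shader might do. This is not the code from the linked repo: the function names, `max_radius`, `thickness`, and the "take the brightest ring" rule are all assumptions for illustration, and only `impact_position` and `decay_time` come from the project description.

```python
import math

def ring_intensity(frag_pos, impact_pos, age, decay_time,
                   max_radius=1.0, thickness=0.05):
    """Brightness in [0, 1] of one expanding ring at a surface point.

    max_radius and thickness are made-up parameters for this sketch.
    """
    if age < 0.0 or age >= decay_time:
        return 0.0                          # impact not started, or fully decayed
    t = age / decay_time                    # 0 at impact, 1 at end of life
    radius = max_radius * t                 # ring grows over its lifetime
    dist = math.dist(frag_pos, impact_pos)  # distance from this point to the impact
    edge = max(0.0, 1.0 - abs(dist - radius) / thickness)
    return edge * (1.0 - t)                 # fade out as the impact ages

def shield_intensity(frag_pos, impacts, now, decay_time):
    """Combine several (impact_pos, start_time) pairs: brightest ring wins."""
    return max((ring_intensity(frag_pos, pos, now - start, decay_time)
                for pos, start in impacts), default=0.0)
```

Swapping `radius = max_radius * t` for `max_radius * (1.0 - t)` would give a shrinking ring instead of an expanding one.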

This works well, is simple, and seems easy to expand. In fact, I’ve done some testing and modifying, with great help from ChatGPT. I understand the shader a bit more now, and I’ve changed the default behavior from an expanding circle to a shrinking circle/square.

Now it’s time to test whether, instead of mouse_click, I can get a ton of raycasts to supply the collision points, and see how performant and complex that is.

Finally, testing with complex geometry, levels, and dynamic or skinned meshes.

If everything works well, this should be a project I can really pursue.

Furthermore, an indie game LilAggy was playing (called Voidness?) was of the same type: a scanner that placed squares on the environment, with the squares changing color based on what they hit. This should also be pretty simple to replicate since this is now shader-based: different objects will just have a unique version of the shader, with a different color or other unique properties!

This Backrooms game has a short section like this. From what I can tell, it’s similar to Voidness, in that it casts a “scan” from the camera perspective/viewport; however, it noticeably has some jitter and randomness in how the points are placed on the environment…

This leads me to believe this isn’t a shader, but rather some kind of particle system that projects onto invisible mesh geometry, then adds some random variation to its exact positioning.

Voidness, the game LilAggy played, seemed much more like little squares being painted onto geometry, and the geometry was definitely more complex, so I think that solution was indeed a shader. Could be wrong, though.

So yeah… I’m starting to think this isn’t a shader, but rather some sort of GPUParticle system and/or just manually instanced points, possibly using object pooling. Maybe thousands of raycasts follow the perspective of the camera, and the points get placed onto level geometry, then given a slight positional offset.

Then colors could be dynamically chosen based on what “collision” geometry it hit, to highlight certain objects.
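A minimal sketch of the jitter-plus-color idea, in Python for readability. Everything here is hypothetical: the tag names, the colors, the `jitter` amount, and `place_point` itself are made up for illustration, and the hit position and tag are assumed to come from whatever raycast the engine provides.

```python
import random

# Hypothetical tags and colors; a real project would use its own categories.
COLOR_BY_TAG = {
    "wall": (1.0, 1.0, 1.0),
    "enemy": (1.0, 0.2, 0.2),
    "item": (0.2, 1.0, 0.4),
}
DEFAULT_COLOR = (1.0, 1.0, 1.0)

def place_point(hit_pos, hit_tag, jitter=0.02, rng=random):
    """Return (position, color) for one scan point.

    The position is the raycast hit nudged by a small random offset on each
    axis; the color is looked up from what the ray hit.
    """
    offset = tuple(rng.uniform(-jitter, jitter) for _ in range(3))
    pos = tuple(p + o for p, o in zip(hit_pos, offset))
    return pos, COLOR_BY_TAG.get(hit_tag, DEFAULT_COLOR)
```

Passing a seeded `random.Random` as `rng` makes the scatter repeatable, which helps when comparing screenshots.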

Hmm. Maybe, instead… just grab all geometry within the camera’s view frustum, instantiate thousands of points onto that geometry randomly, then delete any points not within the camera’s view frustum.

I imagine this should achieve pretty much the same effect, and much more cheaply, since it’s not doing thousands of calculations to get specific points, just placing points at random and then removing those outside the view frustum.
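The "remove points outside the view" step could look roughly like this. It is a sketch under assumptions: points are already in camera space with the camera looking down -Z, the frustum is a plain symmetric perspective frustum, and `in_frustum` / `cull_points` are made-up names. Note it only checks the frustum and knows nothing about points hidden behind other geometry.

```python
import math

def in_frustum(point, fov_y_deg, aspect, near, far):
    """True if a camera-space point lies inside a symmetric perspective frustum."""
    x, y, z = point
    depth = -z                              # camera looks down -Z
    if depth < near or depth > far:
        return False                        # behind the camera, too close, or too far
    half_h = depth * math.tan(math.radians(fov_y_deg) / 2.0)
    half_w = half_h * aspect
    return abs(x) <= half_w and abs(y) <= half_h

def cull_points(points, fov_y_deg, aspect, near, far):
    """Keep only the points the camera could see."""
    return [p for p in points if in_frustum(p, fov_y_deg, aspect, near, far)]
```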

https://stackoverflow.com/questions/12023249/3d-view-frustum-culling-ray-casting

https://discussions.unity.com/t/stoping-gameobjects-out-of-frustum/580860

https://docs.godotengine.org/en/stable/classes/class_visibleonscreennotifier3d.html

The progress so far…

Gore System

Okay, so I’ve got something working in both projects (Cleaner & The Pit).

The Cleaner uses the “Cleaning” script that CodeMonkey made a rough tutorial overview of for Unity, along with a separate, more detailed walkthrough of the full code from some random YouTuber. At first it seemed to work weirdly, but after fiddling with the code according to some commenters, adjusting the “Brush” size down to 16 pixels, and only using a simple plane mesh, I’ve now gotten something that works perfectly.

It just draws onto a mesh, using the brush as a mask/texture, and uses the collision hit data to determine where this texture is drawn. The texture is a separate Mask texture, that blends between a Clean and a Dirty version of the base textures.
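Roughly, the mask painting and the clean/dirty blend boil down to something like the Python sketch below. This is a simplification, not the tutorial’s code: the mask is a plain 2D list, the brush is a solid square rather than a brush texture, and `paint_mask` / `blend` are hypothetical names.

```python
def paint_mask(mask, uv, brush_size=16, value=1.0):
    """Stamp a square brush into a 2D mask at a UV hit coordinate (0..1)."""
    height, width = len(mask), len(mask[0])
    cx = int(uv[0] * (width - 1))           # UV -> pixel column
    cy = int(uv[1] * (height - 1))          # UV -> pixel row
    half = brush_size // 2
    for y in range(max(0, cy - half), min(height, cy + half)):
        for x in range(max(0, cx - half), min(width, cx + half)):
            mask[y][x] = max(mask[y][x], value)   # never "un-clean" a pixel

def blend(dirty, clean, m):
    """Per-pixel blend: m = 0 shows the dirty color, m = 1 the clean one."""
    return tuple(d + (c - d) * m for d, c in zip(dirty, clean))
```

Usage would be something like `paint_mask(mask, hit_uv)` on every raycast hit, with the material sampling the mask to pick between the two texture sets.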

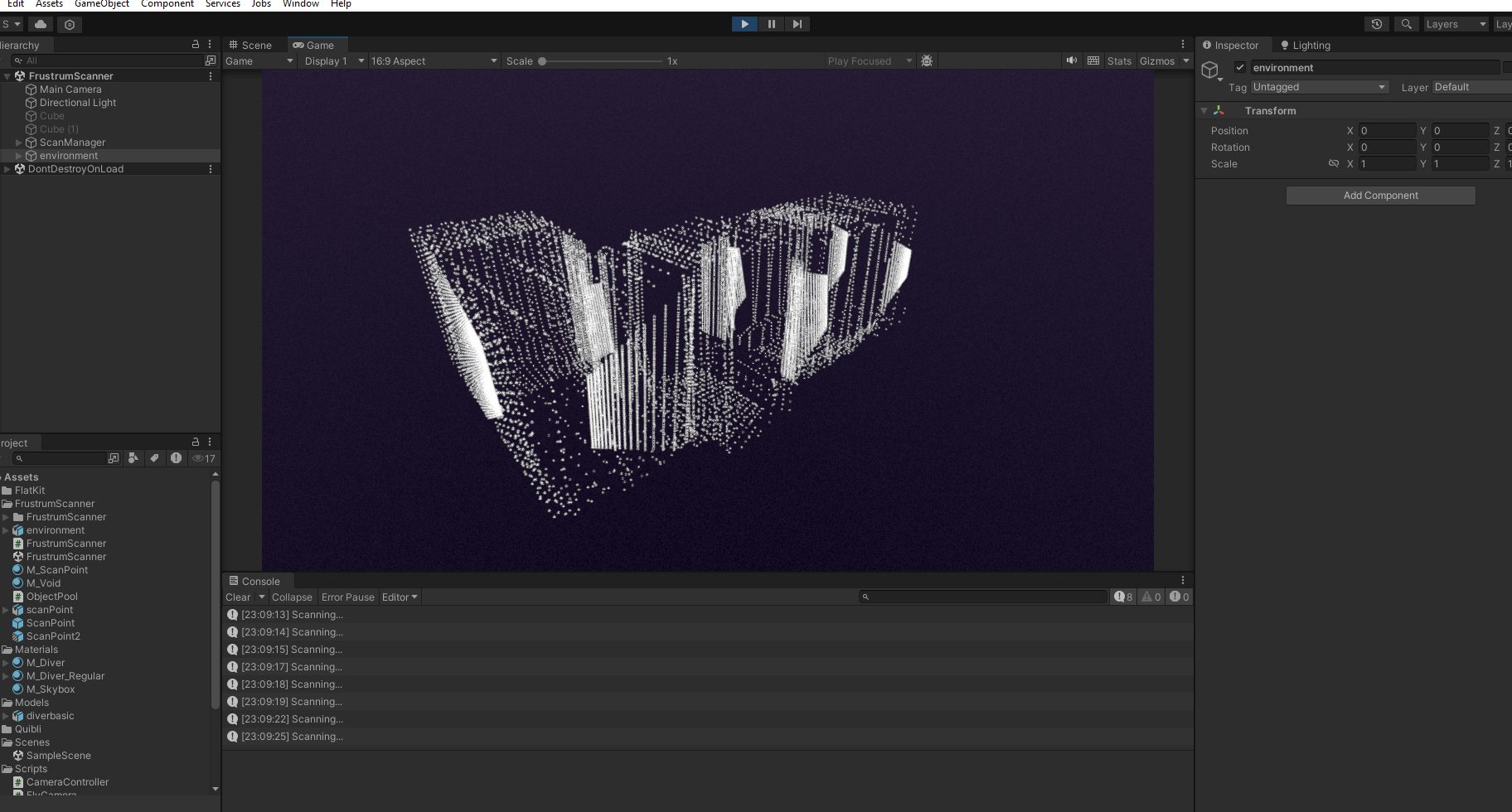

The Pit, on the other hand, shoots rays, based on an arbitrary resolution size, across the entire camera view frustum.

We hit collision objects, grab the hit point, and use that to instantiate small scanHit gameobjects.
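For reference, generating that grid of rays can be sketched like this in Python. It assumes a simple perspective camera looking down -Z in its own space; `scan_directions`, `cols`, and `rows` are made-up names, and in the engine each direction would still need transforming to world space and feeding into a physics raycast.

```python
import math

def scan_directions(cols, rows, fov_y_deg, aspect):
    """Unit ray directions (camera space) covering the view in a cols x rows grid."""
    half_h = math.tan(math.radians(fov_y_deg) / 2.0)
    half_w = half_h * aspect
    dirs = []
    for j in range(rows):
        for i in range(cols):
            # Sample the centre of each grid cell, mapped to [-1, 1].
            u = (i + 0.5) / cols * 2.0 - 1.0
            v = 1.0 - (j + 0.5) / rows * 2.0
            x, y, z = u * half_w, v * half_h, -1.0
            length = math.sqrt(x * x + y * y + z * z)
            dirs.append((x / length, y / length, z / length))
    return dirs
```

The ray count is `cols * rows`, so the "resolution" setting directly controls how many raycasts a single scan costs.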

The scanHit objects, currently, are a simple Mesh with a mirrored “pyramid” shape, only about 8 triangles, and a mesh renderer that renders a simple white unlit shader.

This then pulls from a separate “PoolObject” script that just instantiates an arbitrary number of objects up front; I think it’s currently set to 50,000. This means there’s no spawning/deleting every time you click. Instead, it just resets visibility and position.
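The pooling idea in miniature, as a Python sketch. This is not the actual “PoolObject” script: the `ScanHitPool` class is hypothetical, and recycling the oldest point once the pool is full is an assumption about how reuse might work.

```python
class ScanHitPool:
    """Fixed-size pool: objects are created once, then only moved and shown."""

    def __init__(self, size):
        # Everything is allocated up front and starts hidden.
        self.items = [{"visible": False, "position": (0.0, 0.0, 0.0)}
                      for _ in range(size)]
        self.next_index = 0

    def place(self, position):
        """Reuse the next pooled object instead of instantiating a new one."""
        item = self.items[self.next_index]
        item["visible"] = True
        item["position"] = position
        # Wrap around so the oldest point is recycled when the pool is full.
        self.next_index = (self.next_index + 1) % len(self.items)
        return item
```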

Now, it may be beneficial to clean up and export both sets of meshes, materials, scripts, etc. to separate UnityPackages to keep things as clean as possible. Then import both into a fresh new project and really stress test to see which is more performant.

Both would seem to use a series of raycasts from the Camera to hit mesh objects and colliders, but one places gameobjects, while the other simply modifies the material.

Wait a minute, the Cleaner version uses specific UV-unwrapped objects, as well as a Mask texture. The mask should be able to be unique per game object. However, this would mean constantly modifying possibly 100s of materials, with 100s of unique mask textures in memory, for 100s of game objects…
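A quick back-of-the-envelope for that memory worry, in Python. The numbers are assumptions picked for illustration (512×512 masks, one byte per pixel, 300 objects, no mipmaps or compression), not measurements from the project.

```python
def mask_memory_bytes(width, height, bytes_per_pixel, object_count):
    """Total memory for one uncompressed mask texture per object."""
    return width * height * bytes_per_pixel * object_count

# Assumed example: 300 objects, each with its own 512x512 single-channel mask.
total = mask_memory_bytes(512, 512, 1, 300)
print(total / (1024 * 1024), "MiB")
```

With those assumed numbers it comes out to 75 MiB, and it scales linearly with object count and with the square of the mask resolution.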

The Pit just places simple game objects with shared mesh data and shared material data, and no texture lookups…

Gore System?