Jesse Zaritt Performance Animation

Summary

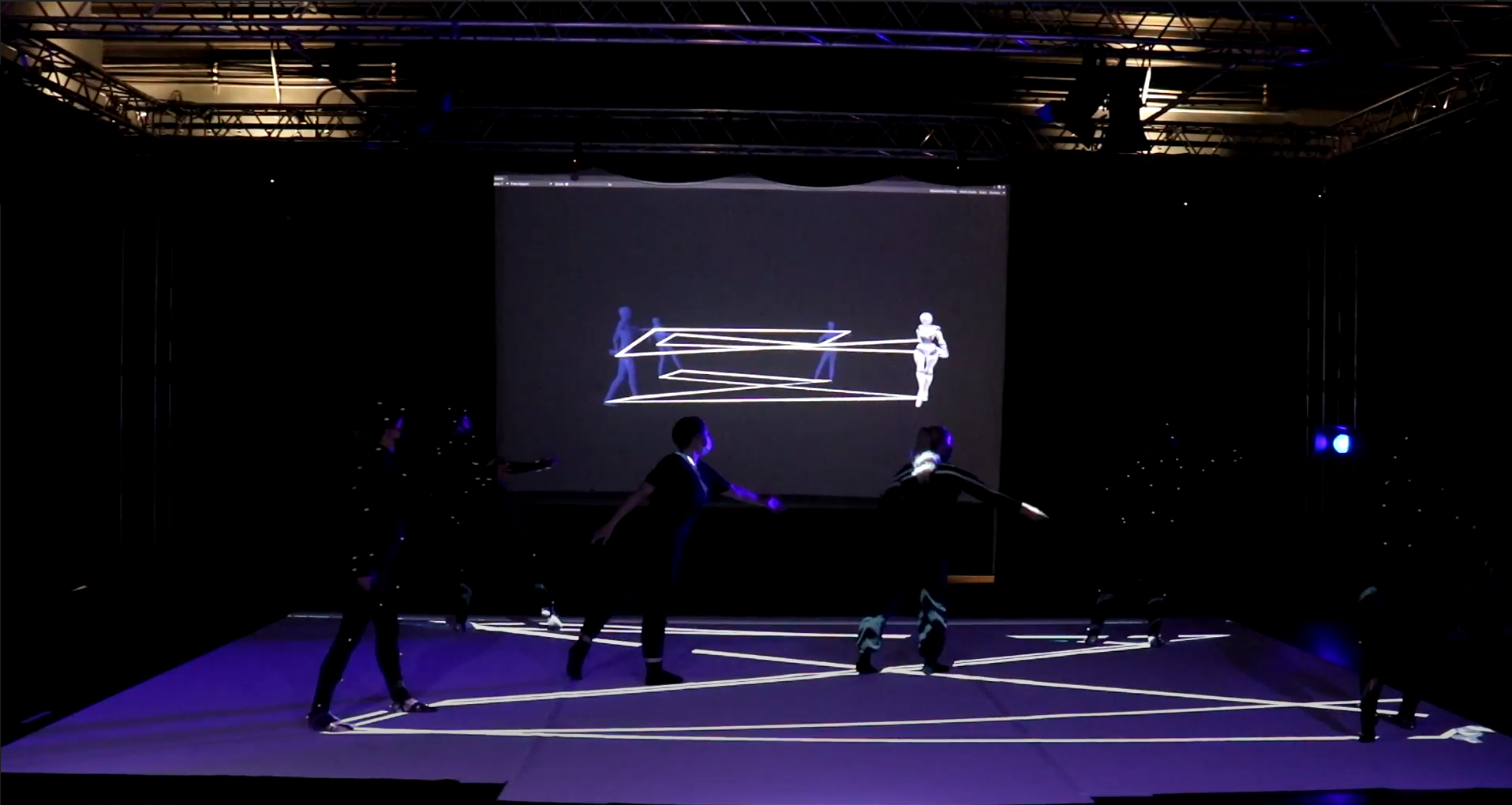

This quick project saw us creating a simple animation to be projected on a scrim behind a dance performance. We worked closely with Jesse Zaritt, a dance instructor for the School of Dance here at UArts.

Jesse was inspired by the motion trails of performers that we visualized for him in our QTM software. He built an idea around them: exploring the space and architecture our bodies create as we move around.

The 3D portion of the final animation, which I worked on, used the motion trails of various performers to create spindly lines that grow and evolve over the course of the video.

Back in September, shortly after the fall term started, we met with dance professor Jesse Zaritt to discuss a collaboration for a dance performance he was planning to present at the end of the semester.

At first we didn't know much about what the performance was or what the end product would be (I don't believe Jesse did either). He did know that he liked the motion trails QTM can display after a capture is recorded.

With this in mind, Jesse had 2 to 4 of his students and performers come in once a week to put on the mocap suit so we could record their performances. The idea was that whatever the final product looked like, it would likely involve the motion capture data we had saved.

Over the next few months, we went on to capture about 15 performers.

At the same time, we were looking to hire an animation student for Work-study at the CIM to work with Jesse on this project. For a while we got no responses, and we were running out of time. So I worked with Jesse a bit to get the ball rolling, creating some concepts based on exploring space and the architecture created by performers' motion trails.

I also sent Jesse screen recordings of each performer with full motion trails. In each recording I orbited the viewport so he could get a sense of the space from many angles.

The 2 videos below show one concept: using the motion line traced by a specific joint to create a path that appears over time and could grow or emit particles. One video follows the path in a rollercoaster-style fly-through, while the other orbits around it for an exterior view.

The video below on the left is another quick concept. In it, the path is visualized with many instances of some geometry, and the geometry fades and dematerializes as the camera flies close.

The video below on the right is an example of the recorded captures that would be sent to Jesse.
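The fade-as-the-camera-approaches idea from the left-hand concept can be described as a distance-based opacity ramp. The sketch below is a minimal illustration only. The `near`/`far` distances and the linear ramp are assumptions, and the concept video was not built from this code.

```python
import math


def fade_opacity(instance_pos, camera_pos, near=0.5, far=3.0):
    """Opacity for one geometry instance based on camera distance.

    Fully transparent (0.0) when the camera is within `near`, fully
    opaque (1.0) beyond `far`, with a linear ramp in between.
    The near/far values are hypothetical, not from the project.
    """
    d = math.dist(instance_pos, camera_pos)
    if d <= near:
        return 0.0
    if d >= far:
        return 1.0
    return (d - near) / (far - near)


# An instance 1.75 units from the camera sits halfway along the ramp.
print(fade_opacity((0, 0, 0), (0, 0, 1.75)))
```

In a real scene this value would drive a material's alpha per instance. A smoothstep curve instead of a linear ramp would soften the start and end of the fade.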

Creating these motion trails in a 3D DCC is a bit more involved and, frankly, tedious. Our QTM software can visualize the motion trails, but I don't believe there's any way to export that data, so the motion paths have to be created in other software. I worked out 2 methods for generating them: one for Blender and one for Maya.

In my opinion, the Blender method is the simpler of the two, though 2 hang-ups make the process a bit rough. Once you have a handle on the whole process, it's the easier one, mainly thanks to Blender's curve objects and their built-in ability to be extruded into geometry.

Maya's process is also fairly easy, and unlike Blender's, it has no major hiccups. However, extruding a profile along a curve to create geometry takes a fair bit of tweaking across a variety of tools and settings. This is especially true when the curve is noisy because it comes from motion capture data. All in all, the process isn't too bad, mainly tedious, and I'm more comfortable working in Blender when I get the opportunity.

I'll give a rough overview of how the process works, since the entire project hinges on creating motion paths/trails from an object's movement in space.

Let's start with Blender and its 2 primary issues that make this workflow a bit unappealing.

First: the .fbx file that QTM outputs by default does not work well in Blender at all. Blender has never been great at importing FBX files created in other DCC software, largely because the format is closed and owned by Autodesk. It has certainly gotten a lot better over the years, especially with addons. Still, the bone rotations typically come in messed up due to differences between left- and right-handed coordinate systems, and in which axis represents forward and which represents up.

This isn't much of an issue if all you need to do is visualize the animation and work around it. In this instance, however, the FBX that QTM spits out has completely broken visuals. I imagine it has something to do with scale, but I haven't been able to fix it. If you look at the image on the right, you may see nothing. That's because the bones come in as infinitesimally small dots, making them impossible to see on their own. Frankly, the only solution I've found is a round trip through Maya:

1. Import the FBX file into Maya, where it comes in correctly.
2. Export it back out of Maya.
3. Import that export into Blender.

This at least gives a semi-decent armature that's far easier to work with and visualize.

Here is an example of the mocap armature after it was first imported into Maya, then exported out to Blender. As you can see, the bone orientations are messed up, but this is clearly a much better result than an essentially invisible rig.

This is a pretty large issue, because it means going through Maya before Blender. That somewhat defeats the purpose of using Blender at all if everything can be done in Maya.

Technically, the rig's animation data still exists in that first image, and the process can still be done. It's just more of a pain to work with because the visual representation is essentially non-existent.

Second: from my limited research during this project, Blender has no simple or easily automated process for creating curves from an object's movement. Maya has a couple of methods, I believe. The easiest is the "Animated Sweep" function in the Animation workspace, which I'll go into in more detail later.

This was quite an annoying discovery. Blender has built-in functionality for visualizing motion paths for both animated objects and armatures, but there's no built-in way to convert these paths to curves or anything similar.

The solution I found was a random blog post with Python code that could be run in Blender's Scripting workspace. This was quite the godsend: it allowed me to keep using Blender, and I wouldn't have without it.

Future Joe: I'm completely unable to find the original blog post/site with the working script I used. However, I've found a page on Blender Stack Exchange that claims to have a working version.

I can't promise the script there works, but it seems like it should. As I understand it, the script:

1. Places points (or possibly vertices) periodically along the motion path of a given object.
2. Loops through them in order.
3. Creates a new NURBS or curve object that uses those points as control points.

That said, it seems it may actually rely on a particle system to generate the points, whereas the original script I used did everything itself.

The code itself is pretty simple. It grabs the Motion Path created by an animated Armature object, samples it, and places a series of points along the path. It then connects them all with a curve object. (With the advent of Geometry Nodes, this whole process may be easier and more customizable, but I have yet to try my hand at it, so we'll stick with the script.)
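For reference, here is a minimal sketch of what such a script can look like. It is not the original (lost) script or the Stack Exchange version. It makes these assumptions:

- It targets the Blender 2.8+ Python API.
- It reads the baked points directly from the pose bone's `motion_path`.
- The object/bone names `Tracker` and `Bone` are hypothetical.
- The path points are treated as world-space coordinates.

It also sets `bevel_depth`, the Python name for the Bevel Depth setting used later to turn the curve into a tube.

```python
"""Sketch: turn a bone's baked Motion Path into a Blender curve object.

Assumes a Motion Path has already been calculated for the bone from the
armature's Object Data Properties (a bone path, not an object path).
Object/bone names are hypothetical placeholders.
"""


def path_to_spline_coords(points):
    """Convert 3D points to the 4D (x, y, z, w) coords that POLY spline
    points expect; w is the point weight, left at 1.0."""
    return [(float(x), float(y), float(z), 1.0) for x, y, z in points]


def curve_from_bone_path(armature_name="Tracker", bone_name="Bone",
                         bevel_depth=0.0):
    import bpy  # only importable inside Blender

    arm = bpy.data.objects[armature_name]
    # Bone motion paths live on the pose bone, not on the object itself.
    path = arm.pose.bones[bone_name].motion_path
    if path is None:
        raise RuntimeError("Calculate the bone's Motion Path first")
    coords = path_to_spline_coords(vert.co for vert in path.points)
    if len(coords) < 2:
        raise RuntimeError("Motion Path has too few points")

    curve = bpy.data.curves.new(name=f"{bone_name}_trail", type='CURVE')
    curve.dimensions = '3D'
    spline = curve.splines.new('POLY')
    spline.points.add(len(coords) - 1)  # a new spline starts with 1 point
    for point, co in zip(spline.points, coords):
        point.co = co
    # Assumption: path points are world-space, so the new object is left
    # at the origin with no transform. A nonzero depth gives a tube.
    curve.bevel_depth = bevel_depth

    obj = bpy.data.objects.new(curve.name, curve)
    bpy.context.collection.objects.link(obj)
    return obj
```

Inside Blender's Scripting workspace, calling `curve_from_bone_path("Tracker", "Bone", bevel_depth=0.02)` should produce a beveled tube along the path. The helper `path_to_spline_coords` is kept separate so it can be checked without Blender.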

Note: Object-based Motion Paths and Armature-based Motion Paths are different. This matters because the script specifically requires Motion Paths created in the Object Data Properties menu (the one with the little green guy, only available when an Armature is selected). Paths from the Object Properties menu (the one with the orange square, available on most objects) won't work.

This small hiccup threw me for a loop for a while, until I realized there were 2 different menus for creating Motion Paths that look exactly the same (thanks, Blender).

With this finally solved, the process is solid. It broadly goes as follows:

Select whatever joint you'd like to create a motion trail for, and parent a single-bone Armature to it.

Select that single-bone Armature, which now follows the joint through its constraint, and create a Motion Path in the Object Data Properties. Be sure to set the Bake Location to Heads, since the head is the part that's constrained.

With the path visible and the Armature object selected, simply run the code. That's it: it should create a new curve object that follows the Motion Path.

Now select that curve, go to its Object Data Properties, and simply increase the Depth under the Bevel submenu.

Done, you now have a tube that follows along an animated object's path.

Quite a bit of thought and work goes into creating something so simple, especially since the functionality already exists. The data just isn't usable beyond visualizing a motion path; unfortunately, it is what it is. That said, once you have the process down pat, it's easy to run through for a series of paths, or to automate.

For Maya, the process is essentially the same in concept, but it takes a few more tools to create a clean motion path. There are no big hiccups like in Blender. In my experience, though, the various tool options need a fair bit of tweaking to get a clean tube.

Instead of an armature, parent a Nurbs Circle to any desired joint.

Use the Animated Sweep. This creates a rough Nurbs Surface that follows the whole animation path. The surface will intersect itself and flatten out at various points, depending on the animation and on the Circle's initial position and rotation.

To fix this, switch to Isoparm selection mode and select one of the Isoparms that runs the whole length of the tube. Then use Duplicate Surface Curves to copy it into a separate curve. You can now hide or delete the original Nurbs Surface.

I recommend cleaning up this new curve using the Rebuild tool in the Curves menu. Tweak the settings until you get a relatively clean, even curve that still follows the path. I typically set the Rebuild Type to Curvature.
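The general idea of evening out a noisy mocap curve can be shown without Maya. The sketch below is a much simpler stand-in for the Rebuild tool: uniform arc-length resampling of a polyline in plain Python. It is closer in spirit to a uniform rebuild than to the Curvature type, it is not Maya's algorithm, and the function name is hypothetical.

```python
import math


def resample_polyline(points, count):
    """Return `count` points spaced evenly by arc length along a 3D polyline."""
    if count < 2 or len(points) < 2:
        raise ValueError("need at least 2 input points and count >= 2")
    # Cumulative distance along the polyline at each input point.
    cum = [0.0]
    for a, b in zip(points, points[1:]):
        cum.append(cum[-1] + math.dist(a, b))
    total = cum[-1]

    out = []
    seg = 0
    for i in range(count):
        target = total * i / (count - 1)
        # Advance to the segment that contains the target distance.
        while seg < len(points) - 2 and cum[seg + 1] < target:
            seg += 1
        span = cum[seg + 1] - cum[seg]
        t = 0.0 if span == 0 else (target - cum[seg]) / span
        a, b = points[seg], points[seg + 1]
        # Linear interpolation between the segment's end points.
        out.append(tuple(p + (q - p) * t for p, q in zip(a, b)))
    return out


# Unevenly spaced input comes out evenly spaced.
print(resample_polyline([(0, 0, 0), (1, 0, 0), (3, 0, 0)], 4))
```

Resampling alone keeps the jitter of the capture. Combining it with a small moving-average pass over the output is one simple way to also smooth the noise before extruding.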

Now create a new Nurbs Circle, snap it to the curve path, and place it at either end point.

Select the new Nurbs Circle and then the curve path (profile first, then path), and run Extrude from the Surfaces menu. In the options, I found that most of the time these settings work:

- Style: Tube
- Result Position: At Path
- Pivot: End Point
- Orientation: Profile Normal

This should give a similarly clean tube that follows the animation path pretty closely. In my experience, though, some settings may need more tweaking, and occasionally you'll need to delete history to fix seemingly random errors. Because of this, I find it harder to run through quickly or automate.

That's the basic idea behind this project's whole workflow: creating geometry or motion paths from the joints of a performer's capture.

Around this time, late October to early November, time was quickly running out. I was the only person working on this, yet I couldn't dedicate all my work time to it, because we have other ongoing projects (which you can see all around this blog site). Due to certain rules and restrictions, Jesse also wasn't allowed to pay me out of pocket to work on it on my own as a freelancer.

Fortunately, a few days to a week later, we finally got a response from an interested Animation student, Nikki Hartmann. Alan and I sat down with her and Jesse and brainstormed ideas for a final product we could feasibly finish in roughly 2 weeks. There were also some deciding factors:

- Nikki knew a bit of 3D modeling and animation in Maya, but she was only a Junior, and her primary focus was 2D animation.
- I was in the process of moving out of my apartment and looking for a new place, so I knew I would be gone for at least a week.

Because of this, whatever we decided on needed to be within Nikki's current skill set.

Eventually Jesse decided on 2 parts for the final animation/video.

The first part was an 8-10 minute "atmospheric/weather" effect, based on something he'd seen in a similar performance by someone else. This was pretty simple to do in After Effects, which Nikki had experience with. Jesse had some images of paint splatters he wanted to use, so we gave those to her and set her on her way.

The process Nikki used to create her part of the animation was interesting. We took the performers' motion data again, this time constraining the Maya camera to the performer's hip. The camera could then be exported straight to After Effects with all its animation data. Nikki would attach one of the paint splash images to this motion data so it followed the movement from a flat perspective. She then applied various stretch and distortion effects, along with fading opacity.

The second part was a 6-minute animation from yours truly: a more 3D piece based on the motion paths that explored their space.

It would start with a 4-5 minute build-up, where a single motion path would slowly appear, stretch, and displace to create almost a "rift"-type effect. The camera would be locked from above so it appeared 2D.

In the last 2 minutes, the camera would finally pan down, and we would start to see the path in 3D perspective. More motion paths, based on more of the performer's joints, would also start to appear and twist around.

The rendering for this was rougher than I expected. First, the scene was hard to preview, because Blender started chugging under all the dense paths. On top of those were various modifiers, effects, and a Geometry Nodes group that created a disintegration-type effect. All of this, plus the 6-minute run time at 30 fps, led to a much, much higher render time than I initially envisioned. Most animations I'd done before were a minute at most, typically around 10-30 seconds at 24 fps, so I didn't have a great sense of how long this would take.

Once I had finished the main part of this, with all effects, I started rendering.

Each frame only took about 1.5 seconds on average using Blender's Eevee. Not too bad, right?

Well, the total number of frames came out to a whopping 10,800.

"6 minute animation * 60 seconds per minute * 30 frames per second = 10,800 frames"

Then,

"1.5 seconds per frame * 10,800 frames = 16,200 seconds"

"16,200 seconds / 60 seconds per minute = 270 minutes"

"270 minutes / 60 minutes per hour = 4.5 hours total render time"
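The same arithmetic fits in a tiny helper, which is handy for sanity-checking a render budget before committing to it. The function name is just for illustration.

```python
def render_hours(duration_min, fps, sec_per_frame):
    """Return (total frames, estimated render hours) for a sequence."""
    frames = duration_min * 60 * fps
    return frames, frames * sec_per_frame / 3600


# The 6-minute, 30 fps sequence at ~1.5 s/frame from above.
print(render_hours(6, 30, 1.5))
```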

That's no small amount of render time, and by this point I had about 2 days left to finish and get something to Nikki for finishing and polishing.

The idea was to render all these motion paths as white masks on a black background. That way, she could easily take the footage into After Effects and use the white masks to reveal the same paint splash images as the weather system, getting those same colors warping and flying around.

The finished concept can be seen below:

All things considered, I feel it turned out pretty decent.

As mentioned previously, this was essentially a mask video for Nikki to composite in After Effects. By the end of the video, there are up to 6 different motion paths. Rendered as a single pass, it would look a bit off: any image composited through the white would be the same image for all of them. That wouldn't look right, since they're all different paths extending out from each other.

To fix this, we wanted to render out an individual pass for each path, so Nikki would have more control over each element.
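Here's a minimal sketch of why one mask per path matters. It's a luma-matte composite in plain Python: each white-on-black pass reveals its own texture over black. This is conceptually what a luma track matte does in After Effects, not a description of Nikki's actual setup, and the function and data layout are hypothetical.

```python
def composite_masks(passes, height, width):
    """Composite per-path mask passes over a black background.

    passes: list of (mask, texture) pairs. mask[y][x] is a 0-1 luminance
    value from a white-on-black render; texture[y][x] is an (r, g, b)
    tuple. Each pass is laid over the result so far, using its mask
    as alpha (a simple luma matte).
    """
    out = [[(0.0, 0.0, 0.0)] * width for _ in range(height)]
    for mask, texture in passes:
        for y in range(height):
            for x in range(width):
                a = mask[y][x]
                out[y][x] = tuple(
                    c_tex * a + c_out * (1.0 - a)
                    for c_tex, c_out in zip(texture[y][x], out[y][x]))
    return out


# Two 1x2 passes: each path's mask reveals a different image.
red = [[(1, 0, 0), (1, 0, 0)]]
blue = [[(0, 0, 1), (0, 0, 1)]]
print(composite_masks([([[1, 0]], red), ([[0, 1]], blue)], 1, 2))
```

With a single combined mask, both pixels would have to reveal the same texture. Separate passes let each path carry its own image.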

This was pretty simple to set up with Blender using the Render Layers in the Compositor.

The caveat was that each layer, all 6 elements, had to render separately, which meant the render time increased significantly...

Fortunately, these extra paths don't appear until after the build-up, around the 4-minute mark. That meant we could get away with rendering just one render layer for the first 4 minutes and saving the other render layers for the last 2 minutes.

When rendering all the layers for the last 2 minutes, the average render time jumped to around 10-12 seconds per frame. To go over the math again...

"10,800 frames - 7,487 frames (the starting frame number for the 4ish minute mark) = 3,313 frames"

"3,313 frames * 11 seconds avg/frame = 36,443 seconds"

"36,443 seconds / 60 seconds per minute = ~607 minutes"

"607 minutes / 60 minutes per hour = ~10 hours total render time"
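When different parts of a sequence render at different speeds, the estimate becomes a sum over segments. The sketch below is a minimal version with an illustrative function name. Each segment is a (frame count, average seconds per frame) pair.

```python
def sequence_hours(segments):
    """Total render hours for (frame_count, avg_seconds_per_frame) segments."""
    return sum(frames * spf for frames, spf in segments) / 3600


# Multi-layer section only: 3,313 frames at ~11 s/frame.
print(round(sequence_hours([(3313, 11)]), 2))
# Whole sequence, assuming the first 7,487 frames stay at ~1.5 s/frame.
print(round(sequence_hours([(7487, 1.5), (3313, 11)]), 2))
```

The multi-layer section alone comes out at roughly 10.1 hours. The second call assumes the single-layer part keeps the ~1.5 seconds per frame average from the earlier render, which the post only measured for the full single-layer sequence. Under that assumption, the whole sequence comes to roughly 13.2 hours on one machine.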

Just by rendering separate passes for each motion path, without adding any extra geometry or detail, the render time more than doubled. And that ~10 hours covers only the last 2 minutes, compared to ~4.5 hours for the whole 6-minute sequence with a single render layer.

Needless to say, I severely underestimated how quickly the seconds add up. I had to get everything as right as possible on the first pass, because I literally didn't have time to make changes and render it out again.

Somehow, I managed. The concept seemed good, and I only made a few small tweaks. I did have to do a few renders, but being at the CIM, I could take advantage of its 3 workstations and split the whole sequence into 3 parts, one per computer. Once everything was set up, I let them run all night and had the finished product.
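Splitting a sequence across machines is just a matter of dividing the frame range. The sketch below assumes frames 1-10,800 and 3 machines, and `trails.blend` is a hypothetical file name. It isn't necessarily how the CIM workstations were actually launched. The flags `-b` (background, no UI), `-s`/`-e` (start/end frame), and `-a` (render the animation) are Blender's standard command-line options. Because Blender processes arguments in order, the frame range goes before `-a`.

```python
def split_frames(first, last, machines):
    """Split an inclusive frame range into contiguous chunks, one per machine."""
    total = last - first + 1
    base, extra = divmod(total, machines)
    chunks, start = [], first
    for i in range(machines):
        # The first `extra` machines take one additional frame each.
        size = base + (1 if i < extra else 0)
        chunks.append((start, start + size - 1))
        start += size
    return chunks


def blender_commands(blend_file, chunks):
    """Build one background-render command line per frame chunk."""
    return [f"blender -b {blend_file} -s {s} -e {e} -a" for s, e in chunks]


for cmd in blender_commands("trails.blend", split_frames(1, 10800, 3)):
    print(cmd)
```

Equal frame counts aren't equal work here. The last chunk contains the ~11 s/frame multi-layer section, so in practice you'd weight the split by estimated seconds per frame rather than by frame count.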

That essentially finished off my role in this project. I handed the mask videos off to Nikki, who did a great job compositing them in After Effects along with the 8-10 minute weather/atmosphere video she made. Jesse, Alan, and Nikki then met to discuss small changes that could be made within After Effects without re-rendering the 3D content. The project was finished just in time for Jesse's presentation.

You can see the final finished product below: